Deep Learning

Understanding Deep Learning

Deep learning is a subfield of machine learning, which is itself a subset of artificial intelligence (AI). It uses multilayered neural networks to analyze and interpret complex data. The approach is loosely inspired by the structure of the human brain, where interconnected neurons process information. By stacking artificial neurons into layers, deep learning models can learn to perform tasks such as classification, regression, and representation learning.

Core Concepts of Deep Learning

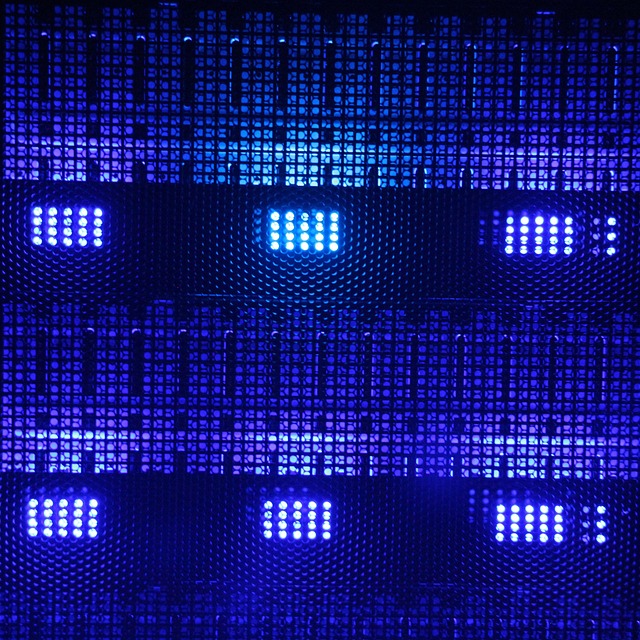

At its core, deep learning revolves around neural networks. These networks consist of layers of nodes, or neurons, that process input data. Each layer applies a transformation to the output of the previous layer, allowing the model to learn increasingly abstract representations. Training adjusts the weights of the connections between neurons to reduce a loss function that measures the model's error on the training data. In practice this is usually done with gradient descent, with the gradients computed by backpropagation, so the model's performance improves over successive passes through the data.
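The idea of layers that transform data, and of training that adjusts connection weights, can be sketched in a few lines of plain Python. This is a minimal illustration rather than a practical implementation: the 2-2-1 network shape, the XOR-style toy data, the starting weights, the learning rate, and the step count are all assumptions chosen for readability.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, params):
    """Run one input through a 2-2-1 network; return hidden and output activations."""
    W1, b1, W2, b2 = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    output = sigmoid(sum(w * h for w, h in zip(W2, hidden)) + b2)
    return hidden, output

def mean_loss(data, params):
    """Mean binary cross-entropy over the dataset."""
    total = 0.0
    for x, y in data:
        _, p = forward(x, params)
        p = min(max(p, 1e-12), 1.0 - 1e-12)  # guard against log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)

def train_step(data, params, lr):
    """One full-batch gradient descent step, with gradients from backpropagation."""
    W1, b1, W2, b2 = params
    gW1 = [[0.0, 0.0], [0.0, 0.0]]
    gb1 = [0.0, 0.0]
    gW2 = [0.0, 0.0]
    gb2 = 0.0
    n = len(data)
    for x, y in data:
        hidden, p = forward(x, params)
        d_out = (p - y) / n  # gradient at the output pre-activation
        gb2 += d_out
        for j in range(2):
            gW2[j] += d_out * hidden[j]
            # Chain rule back through the hidden sigmoid.
            d_hid = d_out * W2[j] * hidden[j] * (1 - hidden[j])
            gb1[j] += d_hid
            for i in range(2):
                gW1[j][i] += d_hid * x[i]
    new_W1 = [[W1[j][i] - lr * gW1[j][i] for i in range(2)] for j in range(2)]
    new_b1 = [b1[j] - lr * gb1[j] for j in range(2)]
    new_W2 = [W2[j] - lr * gW2[j] for j in range(2)]
    new_b2 = b2 - lr * gb2
    return new_W1, new_b1, new_W2, new_b2

# Toy dataset (XOR) and arbitrary starting weights, both illustrative assumptions.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
params = ([[0.5, -0.4], [0.3, 0.8]], [0.0, 0.0], [0.7, -0.6], 0.0)

initial_loss = mean_loss(data, params)
for _ in range(500):
    params = train_step(data, params, lr=0.5)
final_loss = mean_loss(data, params)
print(initial_loss, final_loss)
```

Each call to `train_step` nudges every weight in the direction that lowers the loss, which is the "adjusting the connections" step described above. Real projects would normally rely on a framework with automatic differentiation rather than hand-written gradients.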

Common Architectures in Deep Learning

There are several key architectures commonly used in deep learning:

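- Fully Connected Networks: Every neuron in one layer is connected to every neuron in the next layer. This structure is straightforward but can become computationally expensive for high-dimensional inputs or wide layers, because the number of weights grows with the product of adjacent layer sizes.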

- Convolutional Neural Networks (CNNs): Primarily used for image processing, CNNs utilize convolutional layers to automatically detect features in images, making them highly effective for tasks like image recognition.

- Recurrent Neural Networks (RNNs): Designed for sequential data, RNNs are particularly useful in natural language processing and time series analysis, as they maintain a hidden state that carries information from earlier steps of a sequence to later ones.

- Generative Adversarial Networks (GANs): These consist of two networks, a generator and a discriminator, that compete against each other. GANs are often used for generating realistic images and other data types.

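- Transformers: A newer architecture that has gained popularity for tasks involving language, transformers rely on self-attention mechanisms that let every position in a sequence attend to every other position. Because positions are processed in parallel rather than one step at a time, transformers typically train more efficiently than traditional RNNs on modern hardware.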
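To make the self-attention idea behind transformers more concrete, here is a small sketch of scaled dot-product self-attention in plain Python. It is deliberately simplified: queries, keys, and values are all taken to be the raw token vectors (real transformers apply learned projections and use multiple heads), and the three example token vectors are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with Q = K = V = tokens (no learned projections)."""
    d = len(tokens[0])
    outputs, all_weights = [], []
    for q in tokens:
        # Similarity of this token to every token in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        # Output is a weighted average of all token vectors.
        out = [sum(w * v[i] for w, v in zip(weights, tokens)) for i in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings for a 3-token sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each output vector depends on the whole sequence at once, and the loop over `tokens` has no dependency between iterations, which is why this computation parallelizes well compared with the step-by-step updates of an RNN.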

Applications of Deep Learning

Deep learning has found applications across various domains, including:

- Healthcare: Analyzing medical images, predicting patient outcomes, and personalizing treatment plans.

- Finance: Fraud detection, algorithmic trading, and risk assessment.

- Autonomous Vehicles: Enabling self-driving cars to interpret their surroundings and make decisions.

- Natural Language Processing: Powering chatbots, translation services, and sentiment analysis.

Challenges and Considerations

Despite its potential, deep learning also presents several challenges:

- Data Requirements: Deep learning models typically require large amounts of data to train effectively, which can be a barrier in some fields.

- Computational Resources: Training deep learning models can be resource-intensive, often necessitating specialized hardware like GPUs.

- Interpretability: The complexity of deep learning models can make it difficult to understand how they arrive at specific decisions, raising concerns in critical applications.
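A rough way to see why computational demands grow quickly is to count the trainable parameters of a fully connected network. The sketch below does that for a hypothetical set of layer sizes (784 inputs, two hidden layers of 512, 10 outputs) and estimates the memory needed just to store the weights as 32-bit floats. The layer sizes are assumptions for illustration, and the estimate ignores activations, gradients, and optimizer state, which add considerably more during training.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network with the given layer sizes."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between adjacent layers
        total += n_out         # one bias per neuron in the receiving layer
    return total

# Hypothetical architecture, chosen only as an example.
layer_sizes = [784, 512, 512, 10]
n_params = count_parameters(layer_sizes)
bytes_float32 = n_params * 4  # 4 bytes per 32-bit float

print(n_params)                            # 669706
print(round(bytes_float32 / 1e6, 2), "MB")
```

Doubling the width of the hidden layers roughly quadruples the weights between them, which is one reason larger models call for hardware such as GPUs.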

The Future of Deep Learning

As technology continues to evolve, deep learning is expected to play an increasingly significant role in various industries. Ongoing research aims to improve model efficiency, reduce data requirements, and enhance interpretability. The integration of deep learning with other AI technologies could lead to even more advanced applications, further transforming how we interact with machines and data.

Conclusion

Deep learning is a powerful tool in the AI toolkit, enabling machines to learn complex patterns directly from data using methods loosely inspired by how the brain processes information. While it comes with challenges, its applications are vast and growing, making it a critical area of study and development in the field of artificial intelligence.
