Neural Networks

Introduction to Neural Networks

Neural networks are a class of machine learning models, within the broader field of artificial intelligence (AI), designed to recognize patterns in data and to make predictions or decisions from it. They are inspired by the biological neural networks that constitute animal brains. Their fundamental unit is the artificial neuron, a simplified mathematical model of a biological neuron. This article explores the structure, functioning, training, and applications of neural networks.

Structure of Neural Networks

A neural network consists of interconnected nodes, or artificial neurons, organized in layers. The architecture typically includes three types of layers:

- Input Layer: This is the first layer that receives the input data. Each neuron in this layer represents a feature of the input.

- Hidden Layers: These are intermediate layers between input and output, where most of the computation occurs. A network is commonly called a deep neural network if it contains two or more hidden layers. Each hidden layer transforms the output of the previous layer into a more abstract representation.

- Output Layer: This is the final layer that produces the output of the network, which can be a classification, a prediction, or any other form of result based on the processed data.
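The layered structure described above can be sketched as a list of weight matrices, one per pair of consecutive layers. This is a minimal illustration in plain Python, not a reference implementation: the function names `init_network` and `count_parameters` and the layer sizes are assumptions chosen for the example.

```python
import random

def init_network(layer_sizes, seed=0):
    """Build one (weights, biases) pair per connection between consecutive layers."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        # weights[j][i] connects neuron i of the previous layer to neuron j of this one
        weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers

def count_parameters(layers):
    """Total number of trainable weights and biases."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)

# Hypothetical sizes: 4 input features, two hidden layers of 8 neurons, 3 outputs.
network = init_network([4, 8, 8, 3])
print(len(network))               # 3 sets of connections: input->hidden, hidden->hidden, hidden->output
print(count_parameters(network))  # (4*8 + 8) + (8*8 + 8) + (8*3 + 3) = 139
```

Note that the input layer has no weights of its own: it only holds the feature values, so a network with two hidden layers has three sets of connections.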

How Neural Networks Work

The functioning of neural networks is based on two principles: signal propagation and weight adjustment. When data is fed into the input layer, it is processed through the hidden layers, where each neuron computes a weighted sum of the inputs it receives, adds a bias term, and applies a nonlinear activation function to the result. The output from each neuron is then passed to the next layer until it reaches the output layer.
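As a rough sketch of signal propagation, the code below computes each neuron's output as a weighted sum of its inputs plus a bias, passed through an activation function. The sigmoid activation, the function names, and the hand-picked weights are all assumptions made for illustration; real networks use many different activations and learned weights.

```python
import math

def sigmoid(z):
    """A common activation function that squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias, followed by the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def forward(layers, inputs):
    """Pass the inputs through every layer in turn and return the final outputs."""
    activations = inputs
    for weights, biases in layers:
        activations = [neuron_output(w, b, activations) for w, b in zip(weights, biases)]
    return activations

# Illustrative, hand-picked weights (not trained values).
layers = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0]),  # hidden layer: 2 neurons, 2 inputs each
    ([[1.0, -1.0]], [0.0]),                    # output layer: 1 neuron
]
output = forward(layers, [1.0, 1.0])
print(output)  # a single value between 0 and 1
```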

During the training phase, neural networks typically learn from labeled datasets (supervised learning). Training adjusts the weights of the connections between neurons to minimize the difference between the predicted output and the actual target values. This objective is formalized as empirical risk minimization: the network seeks the weights that minimize the average loss over the training examples. In practice, the minimization is usually carried out with gradient-based optimizers such as stochastic gradient descent, with the gradients computed by backpropagation.
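Empirical risk minimization can be written compactly as follows. The notation is an assumption introduced here for illustration: $f_\theta$ is the network with weights $\theta$, $(x_i, y_i)$ are the $n$ labeled training examples, and $L$ is a per-example loss function.

```latex
\hat{R}(\theta) = \frac{1}{n} \sum_{i=1}^{n} L\bigl(f_\theta(x_i),\, y_i\bigr),
\qquad
\theta^{*} = \arg\min_{\theta} \hat{R}(\theta)
```

Here $\hat{R}(\theta)$ is the empirical risk, the average loss on the training set, which stands in for the expected loss on unseen data that cannot be measured directly.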

Training Neural Networks

Training a neural network involves several key steps:

- Data Preparation: The first step is to prepare the dataset, which includes cleaning, normalizing, and splitting the data into training and testing sets.

- Forward Propagation: During this phase, the input data is passed through the network, and the output is computed based on the current weights.

- Loss Calculation: The loss function measures the difference between the predicted output and the actual target values. Common loss functions include mean squared error for regression tasks and cross-entropy for classification tasks.

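- Backward Propagation: This step applies the chain rule to calculate the gradients of the loss function with respect to the weights, working backward from the output layer. An optimizer such as gradient descent then uses these gradients to update the weights in the direction that reduces the loss.

- Iteration: The process of forward propagation, loss calculation, and backward propagation is repeated for multiple epochs (full passes over the training set) until the loss stops improving or a fixed training budget is reached. Because the loss surface is generally non-convex, training usually ends at a good set of weights rather than a provably optimal one.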
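The steps above can be sketched end to end in plain Python. Everything specific here is an assumption made for the example: the synthetic task (fitting y = x^2), the single hidden layer of 8 tanh neurons, full-batch gradient descent, the learning rate, and the epoch count. It is a teaching sketch, not how production libraries implement training.

```python
import math
import random

def init_params(rng, hidden=8):
    """One hidden tanh layer and a linear output neuron (an illustrative choice)."""
    return {
        "w1": [rng.uniform(-1.0, 1.0) for _ in range(hidden)],  # input -> hidden
        "b1": [0.0] * hidden,
        "w2": [rng.uniform(-0.1, 0.1) for _ in range(hidden)],  # hidden -> output
        "b2": 0.0,
    }

def forward(p, x):
    """Forward propagation for a single scalar input x."""
    hidden = [math.tanh(w * x + b) for w, b in zip(p["w1"], p["b1"])]
    pred = sum(w * h for w, h in zip(p["w2"], hidden)) + p["b2"]
    return hidden, pred

def mse(p, dataset):
    """Loss calculation: mean squared error over (x, y) pairs."""
    return sum((forward(p, x)[1] - y) ** 2 for x, y in dataset) / len(dataset)

def gradients(p, dataset):
    """Backward propagation: gradient of the MSE with respect to every parameter."""
    n = len(dataset)
    size = len(p["w1"])
    g = {"w1": [0.0] * size, "b1": [0.0] * size, "w2": [0.0] * size, "b2": 0.0}
    for x, y in dataset:
        hidden, pred = forward(p, x)
        d_pred = 2.0 * (pred - y) / n                  # d(loss)/d(pred)
        g["b2"] += d_pred
        for j, h in enumerate(hidden):
            g["w2"][j] += d_pred * h
            d_z = d_pred * p["w2"][j] * (1.0 - h * h)  # tanh'(z) = 1 - tanh(z)^2
            g["w1"][j] += d_z * x
            g["b1"][j] += d_z
    return g

def gradient_step(p, g, lr):
    """Weight update: move each parameter a small step against its gradient."""
    for name in ("w1", "b1", "w2"):
        p[name] = [w - lr * dw for w, dw in zip(p[name], g[name])]
    p["b2"] -= lr * g["b2"]

rng = random.Random(0)

# Data preparation: synthetic samples of y = x^2, shuffled and split 80/20.
data = [(i / 10.0, (i / 10.0) ** 2) for i in range(-20, 21)]
rng.shuffle(data)
split = int(0.8 * len(data))
train, test = data[:split], data[split:]

# Normalize inputs using statistics from the training set only.
mean = sum(x for x, _ in train) / len(train)
std = math.sqrt(sum((x - mean) ** 2 for x, _ in train) / len(train))
train = [((x - mean) / std, y) for x, y in train]
test = [((x - mean) / std, y) for x, y in test]

params = init_params(rng)
losses = []
for epoch in range(500):                      # iteration over epochs
    losses.append(mse(params, train))         # forward pass + loss
    gradient_step(params, gradients(params, train), lr=0.01)

print(f"training loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print(f"test loss: {mse(params, test):.3f}")
```

Computing the normalization statistics from the training split alone keeps information about the test set from leaking into training, which is why the split happens before normalization in this sketch.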

Applications of Neural Networks

Neural networks have a wide range of applications across various fields:

- Image Recognition: Neural networks are extensively used in computer vision tasks, such as facial recognition and object detection.

- Natural Language Processing: They play a crucial role in understanding and generating human language, powering applications like chatbots and translation services.

- Healthcare: Neural networks assist in diagnosing diseases, predicting patient outcomes, and personalizing treatment plans.

- Finance: In the financial sector, they are employed for fraud detection, algorithmic trading, and risk assessment.

- Autonomous Vehicles: Neural networks enable self-driving cars to interpret sensory data and make real-time driving decisions.

Challenges and Future Directions

Despite their effectiveness, neural networks face several challenges. One significant issue is the requirement for large amounts of labeled data for training, which can be costly to collect and annotate. Additionally, neural networks can be prone to overfitting, where they perform well on training data but poorly on unseen data. Techniques such as dropout, weight regularization (for example, an L2 penalty on the weights), and data augmentation help mitigate overfitting, and data augmentation also stretches a limited dataset further.
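Two of the techniques mentioned above, dropout and L2 weight regularization, can be sketched in a few lines. The function names, the dropout rate, and the penalty strength are assumptions for illustration; the dropout variant shown is the widely used "inverted" form, which rescales the surviving activations during training.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each activation with probability `rate` during
    training and rescale the survivors so the expected value is unchanged."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # dropout is switched off at inference time
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def l2_penalty(weights, strength):
    """Term added to the loss that discourages large weights."""
    return strength * sum(w * w for w in weights)

rng = random.Random(0)
hidden = [0.2, 0.9, 0.5, 0.7]
dropped = dropout(hidden, rate=0.5, rng=rng)                    # some entries zeroed, the rest doubled
unchanged = dropout(hidden, rate=0.5, rng=rng, training=False)  # identical to `hidden`
penalty = l2_penalty([0.5, -1.0, 2.0], strength=0.01)           # 0.01 * (0.25 + 1.0 + 4.0)
print(dropped, unchanged, penalty)
```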

Looking ahead, the future of neural networks is promising. Ongoing research aims to develop more efficient architectures, improve interpretability, and enhance the ability to learn from smaller datasets. Furthermore, advancements in hardware, such as specialized processors for deep learning, are expected to accelerate the deployment of neural networks in real-world applications.

Conclusion

Neural networks represent a powerful tool in the field of artificial intelligence, enabling machines to learn from data and make informed decisions. As technology continues to evolve, the potential applications and capabilities of neural networks are likely to expand, making them an integral part of future innovations in various domains.

Thanksgiving Dining in Greenville: A Culinary Escape

Thanksgiving Dining in Greenville: A Culinary Escape

Health

Health  Fitness

Fitness  Lifestyle

Lifestyle  Tech

Tech  Travel

Travel  Food

Food  Education

Education  Parenting

Parenting  Career & Work

Career & Work  Hobbies

Hobbies  Wellness

Wellness  Beauty

Beauty  Cars

Cars  Art

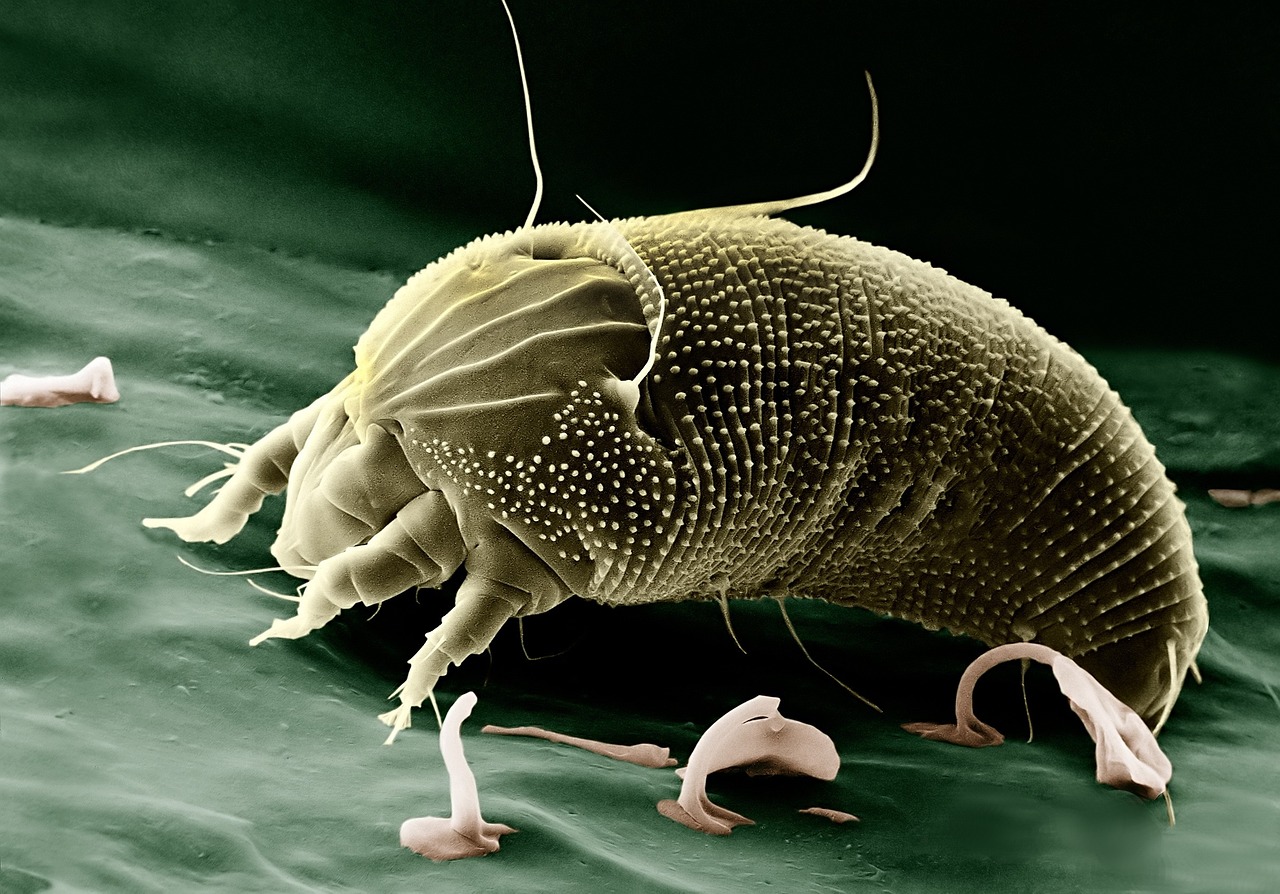

Art  Science

Science  Culture

Culture  Books

Books  Music

Music  Movies

Movies  Gaming

Gaming  Sports

Sports  Nature

Nature  Home & Garden

Home & Garden  Business & Finance

Business & Finance  Relationships

Relationships  Pets

Pets  Shopping

Shopping  Mindset & Inspiration

Mindset & Inspiration  Environment

Environment  Gadgets

Gadgets  Politics

Politics