State Space Models For Event Cameras

Introduction

Event cameras have significantly influenced computer vision by capturing visual information in a fundamentally different way from traditional frame-based cameras. They record changes in the scene asynchronously, which gives high temporal resolution and low latency. The challenge lies in processing the resulting data effectively, particularly in generalizing across different temporal windows. This article examines how state-space models (SSMs) can improve the processing of event camera data.

Understanding Event Cameras

Event cameras, such as the Dynamic Vision Sensor (DVS), respond to changes in brightness at each pixel and produce a stream of asynchronous events rather than a sequence of frames. Each event typically records a pixel location, a timestamp, and a polarity indicating whether the brightness increased or decreased. This design performs well in dynamic environments, particularly in scenes with rapid motion or varying lighting conditions. However, the resulting data is sparse and irregular in time, so it requires models designed for that structure.
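
To make the event stream concrete, here is a minimal Python sketch of how a single pixel might generate events. It assumes the commonly described DVS behavior of emitting an event whenever the log-brightness moves a fixed threshold away from a reference level. The `Event` type, the `pixel_events` function, and the threshold value are illustrative choices, not part of any camera SDK.

```python
import math
from typing import List, NamedTuple, Sequence


class Event(NamedTuple):
    x: int         # pixel column
    y: int         # pixel row
    t: float       # timestamp in seconds
    polarity: int  # +1 = brighter, -1 = darker


def pixel_events(x: int, y: int, times: Sequence[float],
                 brightness: Sequence[float],
                 threshold: float = 0.25) -> List[Event]:
    """Emit one event per `threshold` step of log-brightness change (assumed model)."""
    events: List[Event] = []
    ref = math.log(brightness[0])  # reference level at the last event
    for t, b in zip(times[1:], brightness[1:]):
        diff = math.log(b) - ref
        # A large change can trigger several events at the same timestamp.
        while abs(diff) >= threshold:
            pol = 1 if diff > 0 else -1
            events.append(Event(x, y, t, pol))
            ref += pol * threshold
            diff = math.log(b) - ref
    return events


# One pixel that stays constant, doubles in brightness, then returns.
times = [0.000, 0.001, 0.002, 0.003]
brightness = [1.0, 1.0, 2.0, 1.0]
events = pixel_events(3, 5, times, brightness)
```

Note that nothing is emitted while the brightness is constant, which is why the output is a sparse stream rather than a frame.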

The Limitations of Traditional Approaches

Current methods for processing event camera data often convert temporal windows of events into dense, grid-like representations. While this approach has shown promise, it generalizes poorly: a model trained on one window length may struggle when deployed at a higher inference frequency, that is, with shorter windows, leading to suboptimal performance. This limitation underscores the need for more adaptable and robust modeling techniques.
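
As an illustration of the windowing step described above, the following Python sketch bins the events of one temporal window into a dense count grid indexed by time bin, polarity, row, and column. This is one simple representation among several in use. The function name `events_to_grid`, the tuple layout, and the bin counts are assumptions made for the example.

```python
from typing import List, Sequence, Tuple

# Hypothetical event layout for this sketch: (x, y, t_seconds, polarity)
EventTuple = Tuple[int, int, float, int]


def events_to_grid(events: Sequence[EventTuple], t_start: float, window: float,
                   num_bins: int, height: int, width: int) -> List:
    """Count events per (time bin, polarity, y, x) over [t_start, t_start + window)."""
    grid = [[[[0] * width for _ in range(height)]
             for _ in range(2)]
            for _ in range(num_bins)]
    for x, y, t, p in events:
        if not (t_start <= t < t_start + window):
            continue  # event falls outside this temporal window
        b = min(int((t - t_start) / window * num_bins), num_bins - 1)
        c = 1 if p > 0 else 0  # channel 1 = positive, channel 0 = negative
        grid[b][c][y][x] += 1
    return grid


sample = [(0, 0, 0.000, 1), (1, 0, 0.004, -1), (1, 1, 0.009, 1), (0, 1, 0.020, 1)]
grid = events_to_grid(sample, t_start=0.0, window=0.010,
                      num_bins=2, height=2, width=2)
```

The window length is baked into the representation: halving `window` changes how many events land in each cell, which is one way to see why a network trained on one window length can behave differently at another.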

Introducing State-Space Models

State-space models offer a compelling solution to the challenges posed by event camera data. By incorporating learnable timescale parameters, SSMs can effectively adapt to varying temporal dynamics inherent in the data. This adaptability allows for improved performance across different inference frequencies, enhancing the model's ability to generalize beyond its training conditions.
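
The role of a timescale parameter can be sketched with a one-dimensional linear SSM. The example below assumes a zero-order-hold discretization, a common choice in SSM layers but not one this article specifies, and uses hypothetical parameter values. It shows that rescaling the step size `delta` lets the same continuous-time parameters run at a different sampling rate and reach the same state after the same elapsed time.

```python
import math
from typing import List, Tuple


def discretize_zoh(a: float, b: float, delta: float) -> Tuple[float, float]:
    """Zero-order-hold discretization of dx/dt = a*x + b*u (assumes a != 0)."""
    a_bar = math.exp(delta * a)
    b_bar = (a_bar - 1.0) / a * b
    return a_bar, b_bar


def run_ssm(inputs: List[float], a: float, b: float, c: float,
            delta: float) -> List[float]:
    """Run x_k = a_bar*x_{k-1} + b_bar*u_k, y_k = c*x_k from a zero initial state."""
    a_bar, b_bar = discretize_zoh(a, b, delta)
    x, outputs = 0.0, []
    for u in inputs:
        x = a_bar * x + b_bar * u
        outputs.append(c * x)
    return outputs


# Hypothetical continuous-time parameters (time constant of 1/50 s).
a, b, c = -50.0, 50.0, 1.0
delta_train = 0.010  # step size matching a 100 Hz input rate

# 0.1 s of constant input, sampled at 100 Hz and at 200 Hz.
y_100hz = run_ssm([1.0] * 10, a, b, c, delta_train)
y_200hz = run_ssm([1.0] * 20, a, b, c, delta_train / 2)  # step rescaled
y_naive = run_ssm([1.0] * 20, a, b, c, delta_train)      # step not rescaled
```

Here `y_100hz[-1]` and `y_200hz[-1]` agree, while `y_naive[-1]` does not, because the model without rescaling behaves as if twice as much time had passed.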

Key Features of State-Space Models

State-space models are characterized by their ability to represent dynamic systems through a set of equations that describe the state of the system over time. The key features of SSMs include:

- Flexibility: SSMs can be tailored to accommodate different temporal dynamics, making them suitable for a wide range of applications.

- Scalability: These models can efficiently handle large datasets, which is crucial given the high volume of data generated by event cameras.

- Robustness: By learning from the data, SSMs can maintain performance even in challenging conditions, such as rapid motion or occlusions.

- Integration with Deep Learning: SSMs can be seamlessly integrated with deep learning frameworks, enhancing their applicability in modern computer vision tasks.
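
For reference, the equations mentioned at the start of this section are usually written as follows for a linear time-invariant SSM. This is the standard textbook form, not a formulation specific to this article. The symbols (input $u$, hidden state $x$, output $y$, matrices $A$, $B$, $C$, $D$, step size $\Delta$) are the conventional ones.

```latex
% Continuous-time form
\begin{aligned}
\dot{x}(t) &= A\,x(t) + B\,u(t) \\
y(t) &= C\,x(t) + D\,u(t)
\end{aligned}

% Discrete-time recurrence, where \bar{A} and \bar{B} depend on the step size \Delta
\begin{aligned}
x_k &= \bar{A}\,x_{k-1} + \bar{B}\,u_k \\
y_k &= C\,x_k + D\,u_k
\end{aligned}
```

Because $\Delta$ enters only through $\bar{A}$ and $\bar{B}$, it can be treated as a learnable or adjustable timescale.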

Applications of State-Space Models in Event-Based Vision

The integration of state-space models into event-based vision systems opens up new avenues for research and application. Some notable applications include:

- Object Detection: SSMs can improve the accuracy and speed of object detection algorithms by effectively processing the asynchronous data from event cameras.

- Motion Tracking: The adaptability of SSMs allows for precise motion tracking in dynamic environments, which is essential for applications in robotics and autonomous vehicles.

- Gesture Recognition: Enhanced processing capabilities enable more accurate gesture recognition, paving the way for advancements in human-computer interaction.

- Surveillance Systems: The robustness of SSMs makes them ideal for surveillance applications, where real-time processing of event data is critical.

Challenges and Future Directions

While the application of state-space models in event cameras presents numerous advantages, several challenges remain. The complexity of the models can lead to increased computational demands, necessitating further optimization. Additionally, the integration of SSMs with existing systems requires careful consideration to ensure compatibility and efficiency.

Future research should focus on refining the algorithms used in state-space models and on exploring novel architectures that can further enhance performance. Expanding the applicability of SSMs to other domains within computer vision could also yield significant advancements.

Conclusion

State-space models represent a significant advancement in the processing of event camera data, addressing key limitations of traditional approaches. By leveraging their adaptability and robustness, SSMs can enhance the performance of various applications in event-based vision. As research in this field continues to evolve, the potential for state-space models to transform computer vision remains substantial.

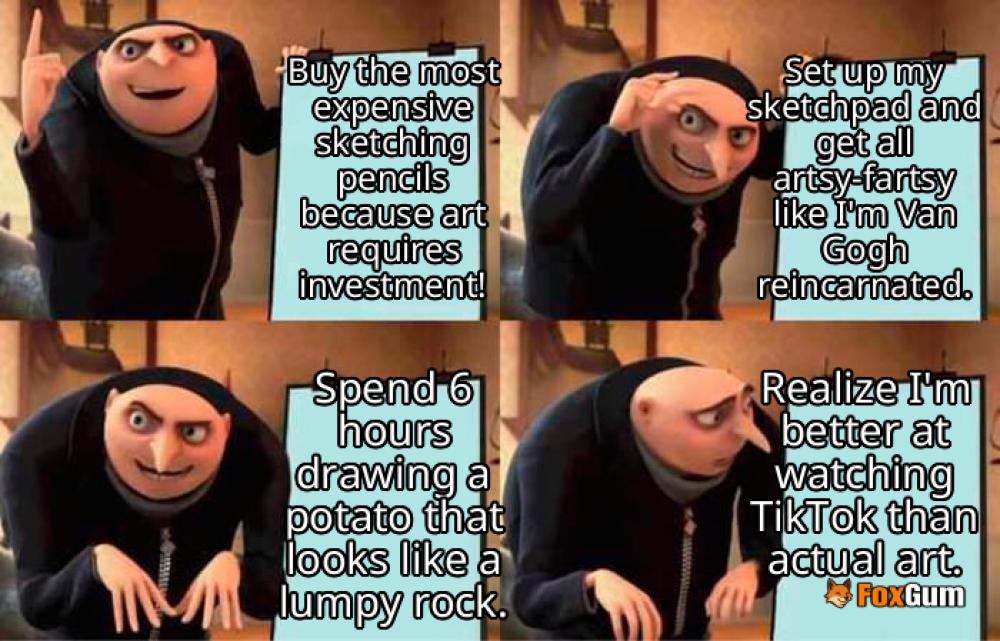
